Jacaranda Bundle

- The Jacaranda all-in-one bundle

- Jacaranda is a monorepo for the @kitmi/jacaranda JavaScript application framework and its relevant utility libraries.

- 70%+ of the documents are generated or modified by GPT-4.

Packages

- @kitmi/jacaranda - A feature-rich JavaScript CLI application and HTTP server application framework with pluggable features. It runs on both node.js and bun.sh, and can switch freely between koa.js (stable) and hono.js (high performance) as the HTTP engine.

- See Manual

- @kitmi/validators - A dynamic validation library designed to validate objects using a declarative syntax known as Jacaranda Object Modifiers Syntax (JOMS). It allows for dynamic validation strategies by using various types of modifiers that can be combined to form complex validation rules.

- See Manual

- @kitmi/tester - A JavaScript unit test tool with in-server code coverage, benchmarking, profiling, test report generation, async dump, etc.

- See Manual

- @kitmi/config - This library provides a simple and flexible way to manage configuration settings for applications across different environments. It supports both JSON and YAML file formats and automatically selects the appropriate configuration based on the current environment. It also supports interpolation of config values.

- See Manual

- @kitmi/utils - A JavaScript common utilities library that enhances lodash with additional functions for manipulating text, URLs, arrays, objects, and names.

- See Manual

- @kitmi/types - This is a fundamental library that defines semantic data types with serialization, validation and sanitization. It also contains common error definitions.

- See Manual

- @kitmi/sys - This is a small collection of utility functions designed to interact with the local system using JavaScript. It is designed to work with both node.js and bun.sh.

- See Manual

- @kitmi/algo - A lightweight JavaScript library for various algorithms and data structures, focusing on graph and tree operations. It provides efficient implementations of Breadth-First Search (BFS), Depth-First Search (DFS), Topological Sorting, Finite State Machines (FSM), and representations for graphs and trees, among other utilities.

- See Manual

- @kitmi/jsonv - JSON Validation Syntax library

- See Manual

- @kitmi/jsonx - JSON Expression Syntax library

- See Manual

- @kitmi/adapters - This library provides a unified interface for interacting with various components that share similar functionalities but may have different underlying implementations or interfaces. By using this library, developers can switch between different components such as HTTP clients or JavaScript package managers without changing the consuming codebase, making the code more modular and easier to maintain.

- See Manual

- @kitmi/data - This library is the data access layer of the Jacaranda Framework, designed to provide both ORM and non-ORM approaches for database interactions. It encapsulates SQL operations, connection pool management, and routine CRUD (Create, Read, Update, Delete) operations, ensuring efficient and scalable data handling. By offering a flexible and powerful API, @kitmi/data simplifies the complexities of database management, allowing developers to focus on building robust applications without worrying about underlying data access intricacies.

- See Manual

Command-lines

- @kitmi/xeml - Jacaranda data entity modeling tool

- See Manual

License

- MIT

- Copyright (c) 2023 KITMI PTY LTD

Jacaranda Types & Validation

In the realm of web development, JavaScript has long been the cornerstone language, driving both client-side and server-side interactions. However, JavaScript's type system is fundamentally based on variable types rather than the semantics of the values they hold. This discrepancy can lead to challenges when developers need to work with data that has a specific business context.

For instance, JavaScript treats a string containing a number ("123") as a string type, even though, semantically, it might represent an integer in a business context. Similarly, a date represented as a string ("2021-01-01") remains a string type, despite its semantic meaning as a date.

This discrepancy between type recognition and business logic necessitates a semantic type system that aligns with the context in which data is used. The @kitmi/types library addresses this need by defining semantic data types that include validation and sanitization, tailored to business logic rather than just the form of data. It allows for the creation of an independent and extensible type system that can be customized to fit the unique requirements of a business application.

Semantic Type System with Validation and Sanitization

The @kitmi/types library is not just a collection of predefined data types; it also allows the creation of an independent and extensible type system. This flexibility is crucial for business applications where the context and semantics of data are paramount. By defining types semantically, developers can ensure that data conforms to business rules and expectations, such as treating a numeric string as an integer or a date string as a datetime object.

Building on the capabilities of @kitmi/types, the @kitmi/validators library introduces a postProcess hook to perform validations and transformations based on the JSON Type Modifiers Syntax (JTMS). This approach enables dynamic and complex validation strategies that are defined using data descriptors rather than code, allowing for the configuration and storage of these rules in a standardized format.

Conventions and Types

The @kitmi/types library defines a variety of types, including:

- any

- array

- bigint

- binary

- boolean

- datetime

- integer

- number

- object

- text

Each type follows a specific interface that includes a name, aliases, a defaultValue, and sanitize and serialize methods.

Type Metadata

The type interface provides a blueprint for how each type should be structured. Common metadata properties such as plain, optional, and default allow for additional customization of how values are processed.

Enumerable types (like bigint, integer, number, and text) have an enum property used for specifying a set of allowed values.

The object type has a schema object property (it can also be a functor that returns a schema object) used for specifying the schema that verifies and processes the object value.

The array type has an element object property (it can also be a functor that returns a schema object) used for specifying the schema that verifies and processes each element value.

The binary type has an encoding text property.

Datetime type has a format text property.

Note: some more specific properties may not be covered here.
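To make the type interface concrete, here is a minimal sketch of what a semantic integer type could look like. It is not the actual @kitmi/types source: the `integerType` object, its error messages, and the exact way `optional`, `default`, and `enum` are applied are assumptions made for illustration only.

```javascript
// Hypothetical semantic "integer" type; the shape mirrors the interface
// described above (name, aliases, defaultValue, sanitize, serialize).
const integerType = {
    name: 'integer',
    aliases: ['int'],
    defaultValue: 0,

    // Convert a raw value into an integer according to the metadata, or throw.
    sanitize(value, meta = {}) {
        if (value == null) {
            if (meta.default !== undefined) return meta.default;
            if (meta.optional) return null;
            throw new Error('Missing a required integer value.');
        }

        // Numeric strings such as "123" are semantically integers.
        const raw = typeof value === 'string' ? value.trim() : value;
        const result = typeof raw === 'string' && raw !== '' ? Number(raw) : raw;

        if (!Number.isInteger(result)) {
            throw new Error(`Invalid integer value: ${JSON.stringify(value)}`);
        }
        if (meta.enum && !meta.enum.includes(result)) {
            throw new Error(`Value ${result} is not in the allowed set.`);
        }
        return result;
    },

    // Produce a transport-friendly representation.
    serialize(value) {
        return value == null ? null : String(value);
    },
};

console.log(integerType.sanitize('123')); // 123
```

The point of the sketch is that the business meaning ("this is an integer") decides the outcome, not the JavaScript type of the incoming value.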

Plugins

The @kitmi/types library also supports plugins that act as serializers, deserializers, pre-processors, and post-processors.

Dynamic Validation with Declarative Syntax

Building on the foundation of @kitmi/types, the @kitmi/validators library introduces a dynamic validation system using the JSON Type Modifiers Syntax (JTMS). This declarative syntax allows developers to specify complex validation rules by combining different types of modifiers.

Modifier Syntax

Modifiers in JTMS can be standalone or require arguments, with the latter being expressed in object or array style.

- Standalone Modifiers: a string made of the prefix followed by the modifier name, e.g. ~ip, ~email, ~strongPassword

- Modifiers with Arguments: These can be expressed either as objects or arrays:

- Object Style:

- name: Modifier name with prefix (e.g., ~mobile)

- options: Arguments for the modifier (e.g., { locale: 'en-US' })

- Two-tuple Array Style:

- Index 0: Modifier name with prefix

- Index 1: Modifier options argument

Types of Modifiers

There are three types of modifiers with different prefix symbols:

- Validator (~): Validates the value.

- Processor (>): Transforms the value.

- Activator (=): Provides a value if the current value is null.
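The three modifier kinds and the three ways of writing a modifier (string, object, two-tuple array) can be sketched as a small pipeline. This is an illustrative sketch, not the @kitmi/validators implementation: `applyModifiers`, `normalizeModifier`, and the handler table (including the made-up `>trim` and `=value` modifiers) are assumptions.

```javascript
// Hypothetical handler table keyed by prefixed modifier name.
const modifierHandlers = {
    '~min': (value, min) => value >= min, // validator
    '~minLength': (value, length) => value.length >= length, // validator
    '>trim': (value) => value.trim(), // processor
    '=value': (options) => options, // activator: supplies a fallback value
};

// Accept "~name", { name, options } or [name, options].
function normalizeModifier(modifier) {
    if (typeof modifier === 'string') return { name: modifier, options: undefined };
    if (Array.isArray(modifier)) return { name: modifier[0], options: modifier[1] };
    return { name: modifier.name, options: modifier.options };
}

// Run the modifiers from left to right over the value.
function applyModifiers(value, modifiers) {
    return modifiers.reduce((current, modifier) => {
        const { name, options } = normalizeModifier(modifier);
        const handler = modifierHandlers[name];
        if (!handler) throw new Error(`Unknown modifier: ${name}`);

        switch (name[0]) {
            case '~': // validators keep the value or fail
                if (!handler(current, options)) throw new Error(`Validation failed: ${name}`);
                return current;
            case '>': // processors replace the value
                return handler(current, options);
            case '=': // activators only fire when the value is null or undefined
                return current == null ? handler(options) : current;
            default:
                throw new Error(`Unknown modifier prefix in: ${name}`);
        }
    }, value);
}

console.log(applyModifiers('  ab ', ['>trim', ['~minLength', 2]])); // "ab"
```

Because the modifier list is plain data, the same rules could be stored in JSON or YAML and applied without writing validation code per field.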

Sample

An optional config object for koa is described with JTMS as below:

{

"type": "object",

"schema": {

"trustProxy": { "type": "boolean", "optional": true },

"subdomainOffset": { "type": "integer", "optional": true, "post": [["~min", 2]] },

"port": { "type": "integer", "optional": true, "default": 2331 },

"keys": [ // match any one of

{

"type": "text"

},

{

"type": "array",

"optional": true,

"element": { "type": "text" },

"post": [["~minLength", 1]]

}

]

},

"optional": true

}

Note: the keys property above can be one of a text value or an array of text with at least one element.

Why Not Use Code-Based Validation Libraries?

While libraries like Joi or Yup provide powerful code-based solutions for data validation, @kitmi/validators takes a different approach by using data to describe data formats. This methodology shifts the focus from writing validation code to defining data formats and rules as configurations. As a result, data format definitions, validations, and even processing rules become standardized and can be managed as configurations, enhancing reusability and maintainability.

Validators and Processors Extension

Furthermore, @kitmi/validators incorporates the @kitmi/jsonv and @kitmi/jsonx libraries, which introduce a series of validation and transformation operators inspired by MongoDB query operators. The @kitmi/jsonv library is utilized through the ~jsv validator, and @kitmi/jsonx is applied via the >jsx processor within JTMS. This integration equips @kitmi/validators with a robust set of validators and processors capable of not just validating data but also transforming it, thus creating a comprehensive data processing pipeline.

Use JTMS to Describe JTMS

type: object
schema:
  type:
    type: text
    enum:
      - "any"
      - "array"
      - "bigint"
      - "binary"
      - "boolean"
      - "datetime"
      - "integer"
      - "number"
      - "object"
      - "text"
  plain:
    type: boolean
    optional: true
  optional:
    type: boolean
    optional: true
  default:
    type: any
    optional: true
  enum:
    onlyWhen:
      $$PARENT.type:
        $in:
          - "bigint"
          - "integer"
          - "number"
          - "text"
    type: array
    element:
      type: '$$'

Reflection-Based Features in @kitmi/jacaranda

The @kitmi/jacaranda framework is an advanced JavaScript application framework designed to facilitate the development of both command-line interface (CLI) and HTTP server applications. It is compatible with node.js and bun.sh, and offers the flexibility to choose between koa.js and hono.js for the HTTP engine, catering to the needs for stability and high performance respectively.

Core Concepts

At the heart of @kitmi/jacaranda lies a reflection-based feature system coupled with a dependency-injection pattern. This design philosophy ensures modularity and a clear separation of concerns, which are essential for building scalable and maintainable applications.

Reflection-Based Feature System

The framework treats each top-level node in the configuration file as a distinct feature. This approach allows developers to modularize their application by encapsulating specific functionalities within self-contained features. Each feature is responsible for a particular aspect of the application, such as configuration, logging, or internationalization.

Features in @kitmi/jacaranda are loaded in a specific order, following the stages of Config, Initial, Services, Plugins, and Final. This ordered loading is further refined by dependency relations declared among features, ensuring that dependencies are resolved before a feature is initialized. Topological sorting is employed to manage the loading sequence of features that share the same stage.
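The loading order described above (stage first, then dependencies within a stage) can be sketched as follows. This is a simplified illustration rather than the framework's loader: the `sortFeatures` function and the sample feature names are assumptions, and dependencies that live in another stage are simply ignored here because earlier stages are already loaded.

```javascript
const STAGES = ['Config', 'Initial', 'Services', 'Plugins', 'Final'];

// Return feature names in load order: stage by stage, and inside each stage
// in topological order of the "depends" relation (depth-first search).
function sortFeatures(features) {
    const ordered = [];

    for (const stage of STAGES) {
        const inStage = Object.keys(features).filter((name) => features[name].stage === stage);
        const visited = new Set();
        const visiting = new Set();

        const visit = (name) => {
            if (visited.has(name)) return;
            if (visiting.has(name)) {
                throw new Error(`Circular dependency detected at feature "${name}".`);
            }
            visiting.add(name);
            for (const dep of features[name].depends ?? []) {
                if (inStage.includes(dep)) visit(dep); // same-stage dependency goes first
            }
            visiting.delete(name);
            visited.add(name);
            ordered.push(name);
        };

        inStage.forEach((name) => visit(name));
    }

    return ordered;
}

console.log(
    sortFeatures({
        logger: { stage: 'Services', depends: ['cache'] },
        cache: { stage: 'Services' },
        settings: { stage: 'Config' },
        router: { stage: 'Plugins', depends: ['logger'] },
    })
); // [ 'settings', 'cache', 'logger', 'router' ]
```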

Moreover, the framework supports both built-in features and custom features placed under the application's features directory. This directory is configurable, allowing developers to structure their application as they see fit. Features can also declare their required npm packages, and the framework provides a script to install these dependencies using the developer's preferred package manager.

Dependency Injection Pattern

@kitmi/jacaranda embraces dependency injection as a core pattern for managing feature dependencies. Each feature acts as an injected dependency, which can be consumed by other parts of the application. This pattern promotes loose coupling and high cohesion, making the application easier to test and maintain.

A feature can either register a service or extend the app prototype. Registering a service is the recommended approach as it aligns with the principles of dependency injection and service-oriented architecture. By registering services, features expose their functionalities to the rest of the application in a decoupled manner.

The service registry is a critical component of the dependency injection system. It maintains a registry of all available services, allowing features to declare their dependencies explicitly. When a feature requires a service, it retrieves the service instance from the registry, rather than creating a direct dependency. This approach simplifies the management of feature interactions and dependencies.

Feature Development Guideline

export default {
    stage: 'Services', // 5 stages: Config, Initial, Services, Plugins, Final
    groupable: true, // optional, true or false; whether it can be grouped by serviceGroup, which usually means multiple instances of this service are allowed
    packages: [], // required packages to be installed before starting the server
    depends: [], // other features as dependencies
    load_: async function (app, options, name) {}, // feature loader; usually registers the service instance under the given name (when grouped, the name is suffixed with the instance id)
};
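As an illustration of the guideline, the sketch below defines a made-up feature that registers a counter service. The `sketchApp` object with its `registerService` and `getService` methods is a hypothetical stand-in for the real app instance, whose API may differ; only the shape of the feature object follows the guideline above.

```javascript
// Hypothetical stand-in for the app object; the real framework provides its own.
const sketchApp = {
    services: {},
    registerService(name, service) {
        if (name in this.services) throw new Error(`Service "${name}" is already registered.`);
        this.services[name] = service;
    },
    getService(name) {
        return this.services[name];
    },
};

// A made-up feature that exposes a simple counter service.
const counterFeature = {
    stage: 'Services',
    groupable: true,
    packages: [],
    depends: [],
    load_: async function (app, options, name) {
        let count = options.start ?? 0;
        // Expose the functionality as a service instead of patching the app.
        app.registerService(name, { next: () => ++count });
    },
};

counterFeature.load_(sketchApp, { start: 10 }, 'counter').then(() => {
    console.log(sketchApp.getService('counter').next()); // 11
});
```

Consumers only need the service name, so the counter implementation can change without touching them.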

Registry system

Global runtime registry

import { runtime } from '@kitmi/jacaranda';

import koaPassport from 'koa-passport';

import pg from 'pg';

runtime.loadModule('pg', pg);

runtime.loadModule('koa-passport', koaPassport);

Module-specific registry

In the default export of each app module.

export default {

...,

registry: {

models,

features: {

...features,

},

middlewares: appMiddlewares,

controllers: {

actions,

resources,

},

},

};

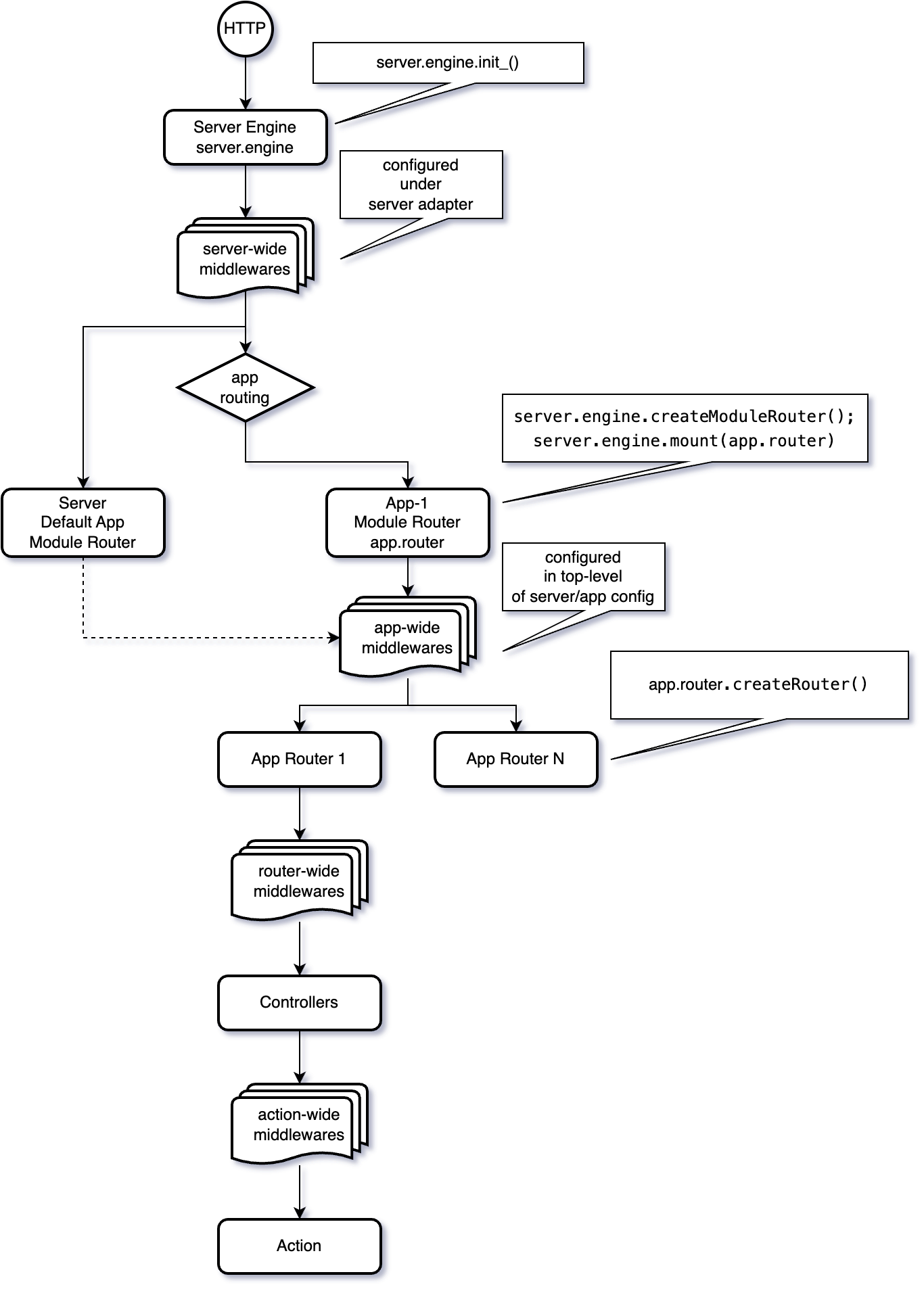

@kitmi/jacaranda Server Routing

Server & App

A server hosts app modules and library modules.

Request Handling Flow

App Modules and Library Modules

- Library Module

- A library module usually provides common business logic or common data access models for other app modules.

- A library module does not provide an external access interface.

- App Module

- An app module usually has a routing config to provide an external access interface through a certain kind of router.

Hereafter, app refers to an app module.

Interoperability

Access lib from app

app.host.getLib('<lib name>')

Access app from another app

- by route

// suppose the target app is mounted at /cms

app.host.getAppByRoute('/cms')

@kitmi/jacaranda Module Loading Helper

Overview

In the Jacaranda Framework, managing and loading modules can be complex, especially when dealing with different package management tools and the modular structure of projects. Modules might reside outside the project's working directory, creating challenges in locating and loading them efficiently. The Module Loader Helper in Jacaranda addresses these challenges by providing a unified way to load modules from various sources.

Module Loader Helper

Usage

The loadModuleFrom_ function is the core utility provided by the Jacaranda Framework for loading modules. It supports various sources, ensuring that modules can be loaded from different locations as needed.

import { loadModuleFrom_ } from '@kitmi/jacaranda';

const moduleToLoad = await loadModuleFrom_(app, source, moduleName, payloadPath);

// source can be 'runtime', 'registry', 'direct', 'project'

Parameters

- app: The current application instance.

- source: The source from which the module should be loaded. It can be one of 'runtime', 'registry', 'direct', or 'project'.

- moduleName: The name of the module to load.

- payloadPath: The path to the module's payload, if applicable.

From Different Sources

runtime

Load module from the Global Runtime Registry.

Server calls runtime.loadModule during bootstrapping to inject the module instance into the global runtime registry.

runtime.loadModule('<module-full-path>', module);

registry

Load module from the App Module Specific Registry.

The app module itself preloads module instances into the app's own registry in the module entry file.

export default {

...,

registry: {

...,

},

};

direct

Load module directly by calling require with esmCheck.

project

Load module from the project's working path.

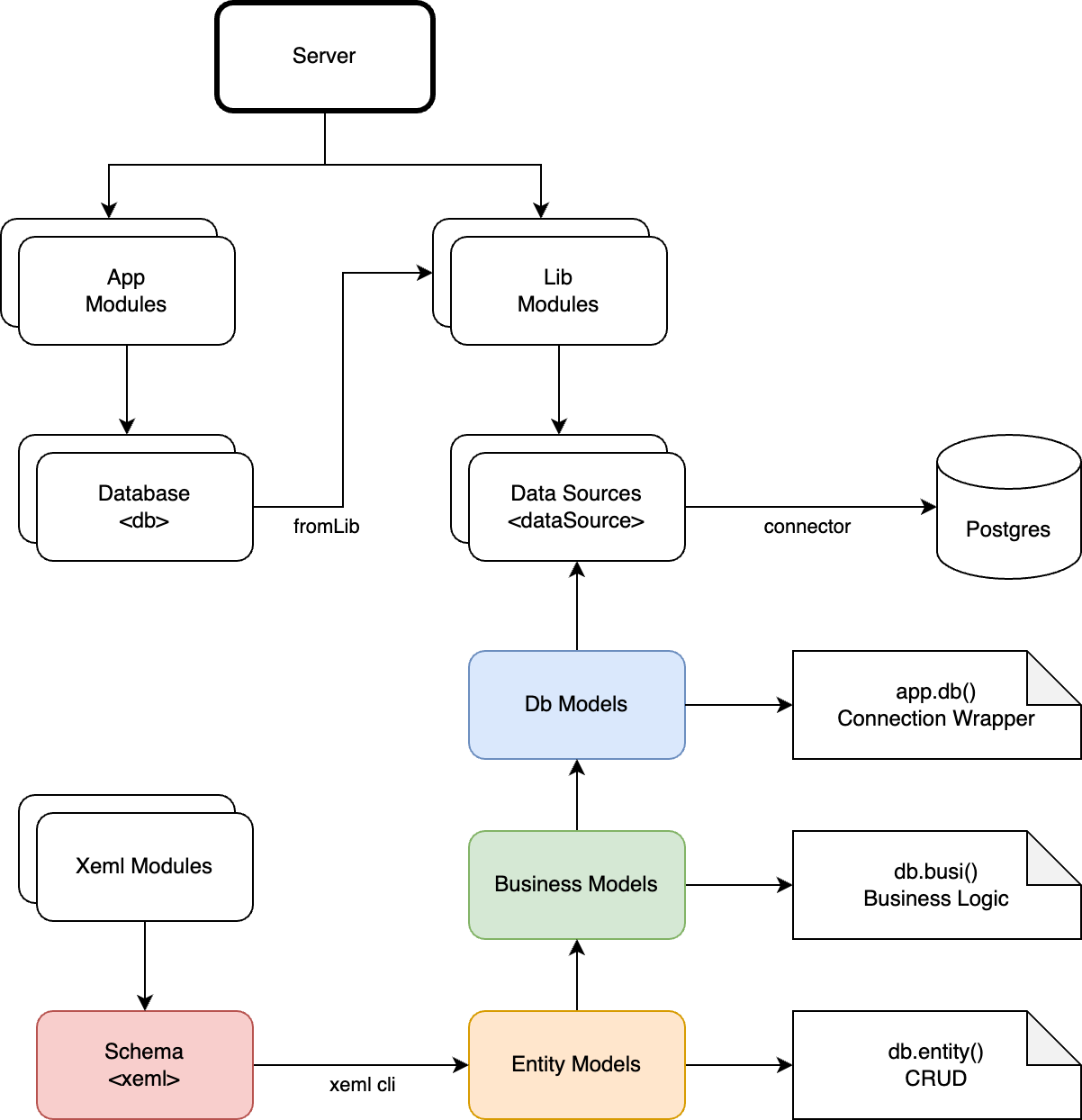

Jacaranda Data Access Models

Overview

This document outlines the design of the Jacaranda Data Access Models for a Node.js backend system, focusing on the interaction between connectors, entity models, database models, and business logic classes. The architecture ensures a clean, extensible, and maintainable codebase, leveraging modern JavaScript features like async/await and Proxies.

Base Components

- Connector: Manages database connections and connection pools.

- DbModel: Base class for database interactions, managing connections and transactions.

- EntityModel: Base class for ORM entities, encapsulates data through a proxy.

- BusinessLogic: Encapsulates the business logic of the system, interacting with one or more EntityModels and managing transactions.

Specific database features can be implemented in subclasses inheriting from the above base components.

E.g.

- PostgresConnector: Leverages the pg package to manage connections and connection pools to a PostgreSQL database.

- PostgresEntityModel: Supports special query syntax of PostgreSQL, e.g., ANY, ALL.

- PostgresDbModel: Supports multiple schemas inside a database.

Connector

The Connector class is responsible for managing connections to the database.

Interface

class Connector {

async connect_(); // Get a connection from the pool or create a new one, depending on the driver

async disconnect_(); // Release the connection back to the pool or close it

async end_(); // Close all connections in the pool

async ping_(); // Ping the database

async execute_(); // Execute a query

async beginTransaction_(); // Begin a transaction

async commit_(); // Commit a transaction

async rollback_(); // Rollback a transaction

async create_(model, data, options, connection) -> ({ data, affectedRows })

async find_(model, options, connection) -> ({ data })

async update_(model, data, options, connection) -> ({ data, affectedRows })

async delete_(model, options, connection) -> ({ data, affectedRows })

async upsert_(model, data, uniqueKeys, dataOnInsert, options, connection) -> ({ data, affectedRows })

}

EntityModel

The EntityModel class serves as a base class for data entities with static meta providing metadata. EntityModel instance itself does not save any data since JS always handles data in the form of JSON and it's not necessary to implement an ActiveRecord-like class.

Interface

class EntityModel {

get meta(); // Entity metadata, { name, keyField, associations, features }

async findOne_(criteria); // Implement find one logic

async findMany_(criteria); // Implement find many logic

async findAll_(); // Implement find all logic

async createOne_(data); // Implement create one logic

async createMany_(dataArray); // Implement create many logic

async updateOne_(criteria, data); // Implement update one logic

async deleteOne_(criteria); // Implement delete one logic

}

DbModel

The DbModel class manages the lifecycle of a connection created from the connector, and all EntityModel instances are created from DbModel. DbModel uses Proxy to delegate PascalCase getter to the entity(getterName) method.

Interface

class DbModel {

get meta(); // Database metadata, { schemaName, Entities }

entity(name); // Get an entity instance

async transaction_(async function(anotherDbInstance));

}
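The Proxy delegation mentioned above, where a PascalCase getter such as db.User resolves to entity('User'), can be sketched like this. The `ProxyDbSketch` class and its in-memory entity map are assumptions for illustration and not the actual DbModel implementation.

```javascript
class ProxyDbSketch {
    constructor(entities) {
        this.entities = entities; // hypothetical map of entity name -> entity model

        // Returning a Proxy from the constructor makes every instance delegate
        // unknown PascalCase property reads to entity(name).
        return new Proxy(this, {
            get(target, prop, receiver) {
                if (typeof prop === 'string' && /^[A-Z]/.test(prop) && !(prop in target)) {
                    return target.entity(prop);
                }
                return Reflect.get(target, prop, receiver);
            },
        });
    }

    entity(name) {
        const model = this.entities[name];
        if (!model) throw new Error(`Unknown entity: ${name}`);
        return model;
    }
}

const sketchDb = new ProxyDbSketch({ User: { name: 'User' } });
console.log(sketchDb.User === sketchDb.entity('User')); // true
```

Regular members such as `entity` and `entities` start with a lowercase letter, so they pass through `Reflect.get` untouched.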

BusinessLogic

The BusinessLogic class encapsulates the business logic of the system. It interacts with one or more EntityModel instances and manages transactions to complete a business operation.

Interface

class BusinessLogic {

constructor(db) {

this.db = db;

}

async validateUserPassword(username, password) {

// Example business operation logic

await this.db.transaction_(async (db) => { // !! the passed-in db instance is different from this.db

const user = await db.entity('User').findOne_({ username });

// verify user password with hashed password

});

}

// Other business logic methods

}

Usage

- A default DbModel instance db can be retrieved from the Jacaranda App instance.

const db = app.db('db name');

const businessLogic = new BusinessLogic(db);

- For a normal query

const User = db.entity('User');

const user = await User.findOne_({ id: 1837 });

Summary

This architecture provides a robust and flexible foundation for database access and management, supporting multiple database types and schemas, and enabling seamless integration of data operations with transaction management. The introduction of the BusinessLogic layer ensures that business operations are encapsulated, maintainable, and scalable. The use of async interfaces and proxies ensures modern, efficient, and maintainable code.

@kitmi/data

Jacaranda Framework Data Access Layer

Built-in Jacaranda Features

The following features come with @kitmi/data and can be configured in both the @kitmi/jacaranda server config file and the app module config file.

- dataSource - Defines the connector of a database

Note: For postgres, different postgres schemas are considered different data sources in this framework.

- dataModel - To be used by @kitmi/xeml for code generation

- db - Maps schema names to the data sources defined by the dataSource feature

Note: Schema in this framework is a collection of entities and it can be defined as a subset of a database. Different schemas can use the same dataSource connector.

Sample config:

dataSource:
  postgres:
    testConnector:
      connection: 'postgres://postgres:postgres@localhost:5432/jc-testdb'
      adminCredential:
        username: 'postgres'
        password: 'postgres'
      logStatement: true
      logConnection: false
      logTransaction: true

dataModel:
  schemaPath: './xeml'
  schemaSet:
    test:
      dataSource: postgres.testConnector
    test2:
      dataSource: postgres.testConnector
  dependencies:
    commons: '@xgent/xem-commons'

db:
  test:
    dataSource: postgres.testConnector
  test2:
    dataSource: postgres.testConnector

Basic Usage

Suppose all the entity models are generated by @kitmi/xeml without errors and the database has been migrated to the latest state.

The db feature will inject a db function into the app object.

The db function signature is as below.

app.db([schema-name]) -> DbModel;

The schema-name is optional and will use the value of app.settings.defaultDb as the default schema. The value of app.settings.defaultDb can be configured in the app's config as below:

settings:

defaultDb: test

The db feature can also reference a data source defined in a library module.

db:

test:

fromLib: '<name of the library defines the data source>'

dataSource: postgres.testConnector

When an app has the db feature enabled, database operations can be performed as below:

const db = app.db('db name'); // or app.db() for default db configured in app's settings

// ctx.db is a shorthand of ctx.module.db, ctx.module is the app instance serving the request

// or db.entity('user') or db.User

const User = db.entity('User');

// or await User.findOne_({ id: 1011 });

// or await User.findOne_(1011) if 1011 is the value of User's primary key;

const user = await User.findOne_({ $where: { id: 1011 } });

const updated = await User.updateOne_({ name: 'New name' }, { $where: { id: 1011 } });

// ...

CRUD Operations

- Retrieval

- async findOne_(findOptions)

- async findMany_(findOptions)

- async findManyByPage_(findOptions, page, rowsPerPage)

- Creation

- async create_(data, createOptions)

- async createFrom_(findOptions, columnMapping)

- Update

- async updateOne_(data, updateOptions)

- async updateMany_(data, updateOptions)

Note: regarding the data, please refer to Data to Update

- Deletion

- async deleteOne_(deleteOptions)

- async deleteMany_(deleteOptions)

- async deleteAll_()

- Not implemented yet

- async createMany_(data /* array of object */, createOptions)

- async createMany_(fieldNames, data /* array of array */, createOptions)

- async aggregate_(...)

- async cached_(...)

Hooks

- async beforeCreate_(context) -> boolean

- async beforeUpdate_(context) -> boolean

- async beforeUpdateMany_(context) -> boolean

- async beforeDelete_(context) -> boolean

- async beforeDeleteMany_(context) -> boolean

- async afterCreate_(context) -> boolean

- async afterUpdate_(context) -> boolean

- async afterUpdateMany_(context) -> boolean

- async afterDelete_(context) -> boolean

- async afterDeleteMany_(context) -> boolean

Operation Options

Common Options

$ctx

The koa-like ctx object, passed in so that its values can be interpolated into query conditions. It is also passed on to associated operations.

- session

- request

- header

- state

$skipUniqueCheck

To skip unique check for $where object when performing xxxOne_ operation.

$key

The key field of the entity.

$noLog

Skip logging SQL statement for certain operation.

findOptions

$select

- Select by dot-separated field name (syntax: [association-path.]field-name)

$select: [

'*',

'user.* -password -passwordSalt', // with exclusions

'offices.bookableResources.type'

]

// SELECT A.*, X.`type` ... JOIN `OfficeBookableResource` as X

Note: The output columns may include some automatically added fields, especially keys of different layers, for building the hierarchy structure

- Select by function

$select: [ { $xr: 'Function', name: 'MAX', alias: 'max', args: ['order'] } ]

// SELECT MAX(`order`) as max

- Using the xrXXX helpers.

import { xrCall, xrCol } from '@kitmi/data';

//...

{

$select: [ xrCall('COUNT', '*'), xrCall('SUM', xrCol('field1')) ]

// SELECT COUNT(*), SUM("field1")

}

Helper Functions

xrCall: call a SQL function

{

$select: [ xrCall('SUM', xrCol('someField')) ] // SUM(someField)

}

xrCol: reference to a table column

Difference between using xrCol and string:

postgres:

xrCol('abc') -> quote_ident('abc') -> "abc"

direct string "abc" or 'abc' -> 'abc'

xrExpr: binary expression

{

$select: [ xrExpr(xrCol('someField'), '+', 1) ] // someField + 1

}

xrRaw: raw expression

Note: The raw statement is injected directly into the SQL statement, so use it carefully.

xrRaw(<raw-statement>, [params])

In the raw statement, you can put a db.paramToken as the placeholders of params and separately pass the params array as the second argument.

e.g.

xrRaw(

`("testJson"->>'duration')::INTEGER > ${Book.db.paramToken} AND ("testJson"->>'duration')::INTEGER < ${Book.db.paramToken}`,

quizCount

)

xrGet: get array element or json field

xrGet(<field>, (<1-based index> | <"." separated key path>)[, <alias>])

- Others

More usage samples can be found in the Data to Update section below.

$relation

Indicate to include what associations in the query.

Note: No trailing (s).

- Use anchor name as the relationship

// use an anchor

$relation: [ 'profile', 'roles' ];

// can use multi-levels

$relation: [ 'profile.address', 'roles.role' ];

- Use a custom relation that is not defined in xeml

$relation: [{ alias: 'anyNode', entity: 'tagCategoryTree', joinType: 'CROSS JOIN', on: null }], //

$relation: [

...,

{

alias: 'course',

entity: 'course',

joinType: 'INNER JOIN',

on: {

'course.rootDoc': xrCol('rootDoc.knowledges.knowledge.documents.document.id'),

},

},

'course.branches.branch.subject',

...

]

$where

The where clause object.

- Condition with AND

{

$where: {

key1: value1,

key2: value2

}

// key1 == value1 AND key2 == value2

}

{

$where: {

$and: [ { key1: value1 }, { key2: value2 } ]

}

// key1 == value1 AND key2 == value2

}

- Condition with OR

{

$where: {

$or: [ { key1: value1 }, { key2: value2 } ],

$or_2: [ { key3: value3, key4: value4 }, { key5: value5 } ],

}

// (key1 == value1 OR key2 == value2) AND ((key3 == value3 AND key4 == value4) OR (key5 == value5))

}

- Condition with NOT

{

$where: {

$not: {

key1: value1,

key2: value2

}

}

// NOT (key1 == value1 AND key2 == value2)

}

- Condition with Expressions

{

$where: {

$expr: xrExpr(xrCol('someField'), '==', xrCol('someField2')),

$expr_2: xrExpr(xrCol('metadata'), '@>', obj)

}

// someField == someField2 AND metadata @> $1

// $1: obj

}

- Condition with Raw Statement

It is better to put db.paramToken in the statement as the placeholder for each param and pass the params array separately as the second argument.

const duration = [10, 20];

await Book.findMany_({

$where: {

$expr: xrRaw(

`("testJson"->>'duration')::INTEGER > ${Book.db.paramToken} AND ("testJson"->>'duration')::INTEGER < ${Book.db.paramToken}`,

duration

),

},

});

Condition Operators

- $gt: >

- $lt: <

- $gte: >=

- $lte: <=

- $exist

- { $exist: true } = IS NOT NULL

- { $exist: false } = IS NULL

- $eq: ==

- $neq: <>

- $in, $notIn

- $between, $notBetween

- $startsWith: LIKE S%

- $endsWith: LIKE %S

- $like: LIKE %S%

- $filter: jsonb_field @> filter
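To show how a $where object and the condition operators could map onto parameterized SQL, here is a small sketch. It is not the query builder used by @kitmi/data: `compileWhere` is a hypothetical function, it covers only a handful of operators, and it assumes PostgreSQL-style $1, $2 placeholders and double-quoted identifiers.

```javascript
const COMPARISON_OPERATORS = { $eq: '=', $neq: '<>', $gt: '>', $gte: '>=', $lt: '<', $lte: '<=' };

// Compile a $where object into { sql, params } with numbered placeholders.
function compileWhere(where) {
    const params = [];
    const quote = (name) => `"${name.replace(/"/g, '""')}"`;
    const bind = (value) => {
        params.push(value);
        return `$${params.length}`;
    };

    const compileField = (field, condition) => {
        if (condition === null) return `${quote(field)} IS NULL`;
        if (typeof condition !== 'object') return `${quote(field)} = ${bind(condition)}`;

        return Object.entries(condition)
            .map(([op, value]) => {
                if (op === '$exist') return `${quote(field)} IS ${value ? 'NOT NULL' : 'NULL'}`;
                if (op === '$in') return `${quote(field)} IN (${value.map((v) => bind(v)).join(', ')})`;
                if (!(op in COMPARISON_OPERATORS)) throw new Error(`Unsupported operator: ${op}`);
                return `${quote(field)} ${COMPARISON_OPERATORS[op]} ${bind(value)}`;
            })
            .join(' AND ');
    };

    const compileGroup = (group) =>
        Object.entries(group)
            .map(([key, value]) => {
                const name = key.replace(/_\d+$/, ''); // "$or_2" is treated as "$or"
                if (name === '$and' || name === '$or') {
                    const joiner = name === '$and' ? ' AND ' : ' OR ';
                    return `(${value.map((item) => `(${compileGroup(item)})`).join(joiner)})`;
                }
                if (name === '$not') return `NOT (${compileGroup(value)})`;
                return compileField(key, value);
            })
            .join(' AND ');

    return { sql: compileGroup(where), params };
}

console.log(compileWhere({ age: { $gte: 18 }, $not: { status: 'banned' } }));
// { sql: '"age" >= $1 AND NOT ("status" = $2)', params: [ 18, 'banned' ] }
```

Keeping values in `params` instead of concatenating them into the SQL string is what makes the generated statement safe to execute with user-supplied input.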

$orderBy

- Order by ascending

{

...,

$orderBy: 'field1' // ORDER BY field1

}

{

...,

$orderBy: [ 'field1', 'field2' ] // ORDER BY field1, field2

}

{

...,

$orderBy: { 'field1': true } // ORDER BY field1

}

{

...,

$orderBy: { 'field1': 1 } // ORDER BY field1

}

- Order by descending

{

...,

$orderBy: { 'field1': false } // ORDER BY field1 DESC

}

{

...,

$orderBy: { 'field1': -1 } // ORDER BY field1 DESC

}

- Mix

{

...,

$orderBy: { 'field1': -1, 'field2': 1 } // ORDER BY field1 DESC, field2

}

- Order by alias

When a query joins tables, all column references are automatically prefixed with the table alias, e.g. { $orderBy: 'field1' } will be converted into ORDER BY A."field1".

If you want to order by an alias that is not a column of any table, the above rule will make the final SQL execution fail. A "::" prefix can be used to prevent the table alias from being added, i.e. { $orderBy: '::field1' } becomes ORDER BY "field1".
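The $orderBy forms listed above (string, array, object with true/1 or false/-1, and the "::" prefix) can be summarized in one small function. This is a sketch under stated assumptions: `compileOrderBy` and its `tableAlias` parameter are hypothetical and only mimic the documented behavior.

```javascript
// Turn an $orderBy option into an ORDER BY clause.
function compileOrderBy(orderBy, tableAlias) {
    const quote = (name) => `"${name.replace(/"/g, '""')}"`;

    // "::alias" opts out of the automatic table alias prefix.
    const column = (field) => {
        if (field.startsWith('::')) return quote(field.slice(2));
        return tableAlias ? `${tableAlias}.${quote(field)}` : quote(field);
    };

    let entries;
    if (typeof orderBy === 'string') {
        entries = [[orderBy, true]];
    } else if (Array.isArray(orderBy)) {
        entries = orderBy.map((field) => [field, true]);
    } else {
        entries = Object.entries(orderBy);
    }

    const items = entries.map(([field, direction]) => {
        const descending = direction === false || direction === -1;
        return descending ? `${column(field)} DESC` : column(field);
    });

    return items.length > 0 ? `ORDER BY ${items.join(', ')}` : '';
}

console.log(compileOrderBy({ field1: -1, field2: 1 })); // ORDER BY "field1" DESC, "field2"
console.log(compileOrderBy('::total', 'A')); // ORDER BY "total"
```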

$groupBy

{

...,

$groupBy: 'field1' // GROUP BY field1

}

{

...,

$groupBy: [ 'field1', 'field2' ] // GROUP BY field1, field2

}

$offset

{

$offset: 10

}

$limit

{

$limit: 10

}

$countBy

Returns total record count

$view

Use a pre-defined view as findOptions

$includeDeleted -

To include logically deleted records

$skipOrm -

Internal use, to skip mapping the result into a nested object.

$asArray -

Return result as array, i.e. array mode.

$sqlOnly -

Only return the sql.

createOptions

$ignore -

If the record already exists (unique key conflicts), just ignore the operation.

$upsert -

If the record already exists (unique key conflicts), just update the record.

$getCreated -

Return created records.

updateOptions

$where

See $where in findOptions.

$getUpdated -

Return updated records.

createOptions & updateOptions

$bypassReadOnly -

Internal use, to bypass some read-only fields

$skipModifiers -

Skip auto generated modifiers.

$skipValidators -

Skip the specified validators

$dryRun -

Just do the entity pre-process and skip the actual db creation call.

$migration -

For migration only.

deleteOptions

$getDeleted -

Return the deleted records

$deleteAll -

To delete all records with deleteMany_

$physical -

To physically delete a record.

Data to Update

- Plain Object

const data = {

field1: 'value1',

field2: 'value2'

};

await Entity.updateOne_(data, { $where, ... });

- Special Values

- xrCol - Set the value to another column

- xrCall - Set the value to the result of a function call

- xrExpr - Set the value to the result of an expression

- xrRaw - Set the value to a raw SQL statement

- doInc(field, n) - Shorthand for field + n using xrExpr and xrCol

- doDec(field, n) - Shorthand for field - n using xrExpr and xrCol

entity.updateOne_({

version: doInc('version', 1) // version = version + 1

}, { $where: { id: xxx } })

- Special Operators

- $set: { key: value [, key2: value2, ...] } for a jsonb field

- $setAt: { at, value } for an array field

- $setSlice: { begin, end, value } for updating part of an array field; value is an array

Transaction

When executing transactions, DbModel (i.e. entity.db or app.db()) will fork a new instance containing a dedicated connection for a transaction.

The business logic of the whole transaction should be wrapped in an async function and must use the db object passed to it (_db in the example below).

// Transaction

const ret = await this.db.transaction_(async (_db) => {

const User = _db.entity('user'); // should use _db to get entity

...

return ret;

});
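The fork-per-transaction idea can be modelled with a mock. The sketch below is not @kitmi/data's DbModel: `MockDb`, `fork` and the BEGIN/COMMIT/ROLLBACK log are illustrative assumptions, including the assumption that a thrown error rolls the transaction back.

```javascript
// Minimal stand-in for the fork-per-transaction pattern; not the real DbModel.
class MockDb {
    constructor(log = [], inTransaction = false) {
        this.log = log;
        this.inTransaction = inTransaction;
    }

    // Fork a new instance that represents a dedicated connection.
    fork() {
        return new MockDb(this.log, true);
    }

    async transaction_(fn) {
        const txDb = this.fork();
        this.log.push('BEGIN');
        try {
            // The callback must use txDb, never the outer instance.
            const result = await fn(txDb);
            this.log.push('COMMIT');
            return result;
        } catch (error) {
            this.log.push('ROLLBACK');
            throw error;
        }
    }
}
```

Because the callback receives a different object from the one `transaction_` was called on, any entity obtained from the outer instance would run outside the transaction, which is why the example above fetches the entity from _db.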

Create with Associations

- 1:many as array and reference as object

const { op, data, affectedRows, insertId } = await Product.create_({

'type': 'good',

'name': 'Demo Product 2',

'desc': 'Demo Product Description 2',

'image': 'https://example.com/demo.jpg',

'thumbnail': 'https://example.com/demo-thumb.jpg',

'free': true,

'openToGuest': true,

'isPackage': false,

'hasVariants': true,

'category': 2,

':assets': [

{

'tag': 'snapshots',

':resource': {

mediaType: 'image',

url: 'https://example.com/demo-asset.jpg',

},

},

{

'tag': 'snapshots',

':resource': {

mediaType: 'image',

url: 'https://example.com/demo-asset2.jpg',

},

},

{

'tag': 'poster',

':resource': {

mediaType: 'image',

url: 'https://example.com/demo-poster.jpg',

},

},

],

':attributes': [

{

type: 'dimension',

value: '10x10x10',

},

{

type: 'origin',

value: 'China',

},

],

':variants': [

{

type: 'color',

value: 'red',

},

{

type: 'color',

value: 'blue',

},

{

type: 'color',

value: 'green',

},

{

type: 'size',

value: 'L',

},

{

type: 'size',

value: 'M',

},

{

type: 'size',

value: 'S',

},

],

});

Find with Associations

const result = await Product.findOne_({

id: insertId, // all k-v pairs without starting with $ will be pushed into $where

$select: ['*'],

$relation: ['category', 'assets.resource', 'attributes', 'variants'],

});

Update with associations

- Update 1:1 or m:1 association

- Update 1:m association with sub-operations

- $delete : delete existing

- $update : update existing

- $create : create more

await Product.updateOne_(

{

'name': 'Demo Product 200',

// a product has many assets

':assets': {

$delete: [existing[':assets'][0], existing[':assets'][1]],

$update: [

{

...Product.getRelatedEntity('assets').omitReadOnly(existing[':assets'][2]),

tag: 'poster2',

},

],

$create: [

{

'tag': 'poster',

':resource': {

mediaType: 'image',

url: 'https://example.com/demo-poster2.jpg',

},

},

],

},

},

{ $where: { id: insertId }, $getUpdated: true }

);

License

- MIT

- Copyright (c) 2023 KITMI PTY LTD

@kitmi/data How-To

INSERT INTO ... SELECT ...

await ClosureTable.createFrom_(

{

$select: [

'ancestorId',

'anyNode.descendantId',

xrAlias(xrExpr(xrExpr(xrCol('depth'), '+', xrCol('anyNode.depth')), '+', 1), 'depth'),

],

$where: { 'descendantId': parentId, 'anyNode.ancestorId': childId },

$relation: [{ alias: 'anyNode', entity: 'tagCategoryTree', joinType: 'CROSS JOIN', on: null }],

$upsert: { depth: xrCall('LEAST', xrCol('depth'), xrCol('EXCLUDED.depth')) },

},

{

'ancestorId': 'ancestorId',

'anyNode.descendantId': 'descendantId',

'::depth': 'depth',

}

);

// INSERT INTO "tagCategoryTree" ("ancestorId","descendantId","depth") SELECT A."ancestorId", anyNode."descendantId", ((A."depth" + anyNode."depth") + $1) AS "depth" FROM "tagCategoryTree" A , "tagCategoryTree" anyNode WHERE A."descendantId" = $2 AND anyNode."ancestorId" = $3 ON CONFLICT ("ancestorId","descendantId") DO UPDATE SET "depth" = LEAST("tagCategoryTree"."depth",EXCLUDED."depth")

WHERE xxx IN (SELECT ...)

await TagCategoryTree.deleteMany_({

$where: {

descendantId: {

$in: xrDataSet(TagCategoryTree.meta.name, {

$select: ['descendantId'],

$where: { ancestorId: keyValue },

}),

},

}

});

// DELETE FROM "tagCategoryTree" WHERE "descendantId" IN (SELECT "descendantId" FROM "tagCategoryTree" WHERE "ancestorId" = $1)

Custom join and group by through skipping orm

const ret = await Video.findMany_({

$select: [xrCall('COUNT', xrCol('rootDoc.taggings.tag.id'))],

$relation: [

'rootDoc.knowledges.knowledge.documents.document',

'rootDoc.taggings.tag',

{

alias: 'course',

entity: 'course',

joinType: 'INNER JOIN',

on: {

'course.rootDoc': xrCol('rootDoc.knowledges.knowledge.documents.document.id'),

},

},

'course.branches.branch.subject',

],

$where: {

'rootDoc.taggings.tag.id': { $in: [7, 8] },

'course.branches.branch.subject.id': { $in: [1, 2] },

'course.branches.branch.id': { $in: [1, 2] },

'course.id': { $in: [1, 2] },

},

$groupBy: ['rootDoc.taggings.tag.id'],

$skipOrm: true,

});

// SELECT COUNT(H."id") FROM "video" A LEFT JOIN "document" B ON A."rootDoc" = B."id" LEFT JOIN "documentKnowledge" C ON B."id" = C."document" LEFT JOIN "knowledgeChip" D ON C."knowledge" = D."id" LEFT JOIN "documentKnowledge" E ON D."id" = E."knowledge" LEFT JOIN "document" F ON E."document" = F."id" LEFT JOIN "documentTagging" G ON B."id" = G."entity" LEFT JOIN "tag" H ON G."tag" = H."id" INNER JOIN "course" course ON A."rootDoc" = F."id" LEFT JOIN "branchCourse" I ON course."id" = I."course" LEFT JOIN "subjectBranch" J ON I."branch" = J."id" LEFT JOIN "subject" K ON J."subject" = K."id" WHERE H."id" = ANY ($1) AND K."id" = ANY ($2) AND J."id" = ANY ($3) AND course."id" = ANY ($4) AND A."isDeleted" <> $5 GROUP BY H."id"

UPDATE ... SET xxx = CASE WHEN EXISTS ... END

const { affectedRows } = await UuidSequence.updateOne_(

{

status: {

$case: {

$when: { $expr: { $exist: xrDataSet(entity, { $select: [1], $where: { [field]: uuid } }) } },

$then: 'used',

$else: 'new',

},

},

},

{

$where: {

uuid,

status: 'fetched',

},

}

);

// UPDATE "uuidSequence" SET "status" = CASE WHEN (EXISTS (SELECT 1 FROM "address" WHERE "ref" = $1 AND "isDeleted" <> $2)) THEN $3::uuidSequenceStatus ELSE $4::uuidSequenceStatus END WHERE "uuid" = $5 AND "status" = $6

Query by view

const { data, totalCount } = await Project.findManyByPage_({ $view: 'listItem', $where: where }, page, records);

@kitmi/tester

Unit Test Utility

@kitmi/tester is a JavaScript unit test utility with API code coverage and async dump for tracking async leaks.

Features

- Support coverage test of @kitmi/jacaranda application

- Support allure report

- Support async dump for debugging application hanging issue caused by pending async event

- Support @kitmi/jacaranda worker

- Support authentication-protected API test

- Support JSON Validation Syntax

- Support configurable test case on/off switches

- Support profiling

- Support benchmark

- Support test step and progress record

- Support job pipeline for long-run test

Interface

jacat is available as a global object, or can be imported by

import { jacat } from '@kitmi/tester';

- startServer_(serverName?): start a server with options specified by serverName in the test config

- startWorker_(name?, async app => {/* test to run */}, options): start a worker

- withClient_(serverName?, authentication, async (client, server) => {/* test to run */}, options?): start a worker and create an http client

- benchmark_(mapOfMethods, verifier, payload): run a benchmark against several different implementations of the same purpose

- profile_(name, async () => {/* test */}): run profiling against a test function

- step_(name, fn): test step

- param(name, value): record a param used in a test into the test report

- attach(name, value): attach an object produced during a test to the test report

Usage

1. add .mocharc.js to the project root

require('@swc-node/register'); // for esmodule and commonjs hybrid mode

require('@kitmi/utils/testRegister'); // adding should and expect dialects for chai

module.exports = {

timeout: 300000,

require: ['@kitmi/tester'], // for bootstrapping tester

reporter: 'mocha-multi', // for combining console reporter and allure reporter

reporterOptions: 'mocha-multi=test/mocha-multi-reporters.json', // as above

};

2. add test/mocha-multi-reporters.json config

{

"spec": {

"stdout": "-",

"options": {

"verbose": true

}

},

"allure-mocha": {

"stdout": "-",

"options": {

"resultsDir": "./allure-results"

}

}

}

3. add test/test.config.json config

{

"skip": {

"suites": {}

},

"enableAsyncDump": false,

"enableAllure": true,

"servers": {

"server1": { // server options

"configPath": "test/conf",

"controllersPath": "test/actions",

"sourcePath": "./",

"logLevel": "info"

},

"server2": "src/server.js" // server entry file

},

"workers": {

"tester": { // worker options

"configName": "test",

"configPath": "test/conf"

},

"test2": "src/test2.js" // worker entry file

},

"authentications": {

"client1": {

"loginType": "password",

"accessType": "jwt",

"loginOptions": {

"endpoint": "/login",

"username": "user",

"password": "pass"

}

}

}

}

4. write test cases

For more examples, refer to test/*.spec.js.

describe('test1', function () {

it('should pass1', function () {

expect(true).to.be.true;

});

it('should pass2', function () {

expect(true).to.be.true;

jacat.attach('test2 result', {

key: 'tesst',

key2: 'tesst',

key3: 'tesst',

});

});

it('should pass async', async function () {

await jacat.step_('step1', async () => {

await new Promise((resolve) => setTimeout(resolve, 100));

});

expect(true).to.be.true;

});

});

5. run test cases

mocha --recursive test/**/*.spec.js

6. generate test report

allure generate allure-results --clean -o allure-report && serve ./allure-report

7. run code coverage test and report

nyc --reporter=html --reporter=text mocha --recursive test/**/*.spec.js && open ./coverage/index.html

API test

Authentication

- loginType

- password

- accessType

- jwt

- loginOptions:

{

"authentications": {

"client1": {

"loginType": "password",

"accessType": "jwt",

"loginOptions": {

"endpoint": "/login",

"username": "user",

"password": "pass"

}

}

}

}

it('/test/protected ok', async function () {

await jacat.withClient_('server1', 'client1', async (client, server) => {

const res = await client.get('/test/protected');

expect(res).to.deep.equal({ status: 'ok' });

});

});

XEML Entity Definition Guide

XEML (XGENT.ai Entity Modeling Language).

Overview

XEML is a domain-specific language for defining data entities, their relationships, and behaviors. It provides a concise way to describe database schemas, validation rules, and data transformations.

Entity Structure

An entity definition in XEML typically contains the following sections, recommended to be defined in this order:

- with: Feature definitions that provide special capabilities to the entity and add required fields

- has: Field definitions

- associations: Relationship definitions

- key: Primary key definition (currently only supports single primary key)

- index: Non-foreign key indexes (foreign keys are automatically added in relationship definitions)

- views: Common output view dataset definitions (predefined field selections and joined relationships)

- modifiers: Entity owned modifiers (validator, processor and activator) definitions (e.g. passwordHasher as a processor)

- data: Predefined initialization data (excluding test data, which can be added manually in the migration directory)

Inheritance

XEML supports inheritance for the following definitions:

- features

- fields

- key

- index

- views

- associations (when a relationship is inherited, the left side is automatically modified to the current entity)

- modifiers

Multiple inheritance is supported, with definitions applied in reverse order:

entity A extends B, C

In this example, A first inherits definitions from C, then from B.
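The reverse application order can be pictured as a merge. The sketch below models entities as plain objects of field definitions and assumes that a definition applied later overrides one applied earlier, which the text above does not state explicitly; `resolveFields` is a hypothetical name, not part of XEML tooling.

```javascript
// Hypothetical model: each entity is a plain object of field definitions.
function resolveFields(ownFields, bases) {
    // `bases` is in declaration order, e.g. [B, C] for "entity A extends B, C".
    // Apply in reverse: C first, then B, then the entity's own fields.
    const merged = {};
    for (const base of [...bases].reverse()) {
        Object.assign(merged, base);
    }
    return Object.assign(merged, ownFields);
}

const B = { name: 'from B', code: 'from B' };
const C = { name: 'from C', desc: 'from C' };
const A = resolveFields({ code: 'from A' }, [B, C]);
console.log(A); // name comes from B, desc from C, code from A
```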

XEML also supports entity templates with generic parameters:

// A closureTable template

entity closureTable(IdType)

with

autoId

has

ancestorId : IdType

descendantId : IdType

depth : integer

index

[ ancestorId, descendantId ] is unique

// Using the template

entity documentTable extends closureTable(bigint)

associations

refers to document on ancestorId

refers to document on descendantId

Features

Features provide special capabilities to entities and add required fields. They are treated specially in the database access layer.

Syntax

with

<feature_name>["(" [ <optional_parameters> ] ")"]

...

Examples

with

autoId

createTimestamp

updateTimestamp

changeLog

With parameters:

with

autoId({ type: 'bigint' })

or

with

autoId({ type: 'uuid' })

Available Features

- atLeastOneNotNull: Automatically checks that at least one of the specified fields is not null
  - Parameters:
    - fields - Array or string of field names to check

- autoId: Auto-incrementing or auto-generated ID field
  - Parameters: options - Optional configuration object or string (field name)
    - name (default: 'id') - Field name
    - type (default: 'integer') - Field type ('integer', 'bigint', 'uuid')
    - startFrom - Starting value for auto-increment (for integer/bigint types)

- changeLog: Keeps track of changes made to the entity
  - Parameters: options - Optional configuration object
    - storeEntity (default: 'changeLog') - Entity to store change logs

- createAfter: Automatically creates associated entity after the main entity is created
  - Parameters: relation, initData
    - relation - Target associated entity anchor
    - initData - Optional initial data for the associated entity

- createBefore: Automatically creates associated entity before the main entity is created
  - Parameters: relation, initData
    - relation - Target associated entity anchor
    - initData - Optional initial data for the associated entity

- createTimestamp: Saves record creation time
  - Parameters: options - Optional configuration object or string (field name)
    - name (default: 'createdAt') - Field name
    - Other datetime field properties

- hasClosureTable: Automatically creates self-referencing closure table record with depth being 0
  - Parameters: closureTable, orderField
    - closureTable - Associated closure table name
    - orderField - Optional field for ordering

- i18n: Multilingual support
  - Parameters: options
    - field - Field name to internationalize
    - locales - Locale mapping object

- isCacheTable: Cache table feature with optional auto expiry
  - Parameters: autoExpiry
    - autoExpiry - Optional auto expiry configuration

- logicalDeletion: Logical deletion
  - Parameters: options - Optional configuration object or string (field name)
    - When string: Name for the deletion flag field (default: 'isDeleted')
    - When object: {fieldName: value} - Field and value to indicate deletion

- stateTracking: Tracks changes to state fields with enum property, recording state change times
  - Parameters: options
    - field - Field name with enum values to track
    - reversible - Whether state changes can be reversed

- updateTimestamp: Saves record update time
  - Parameters: options - Optional configuration object or string (field name)
    - name (default: 'updatedAt') - Field name
    - Other datetime field properties

- userEditTracking: Tracks user edits, recording the users who created and updated records
  - Parameters: options
    - userEntity (default: 'user') - User entity name
    - uidSource (default: 'state.user.id') - Source of user ID
    - trackCreate (default: 'createdBy') - Field to track creator
    - trackUpdate (default: 'updatedBy') - Field to track updater
    - revisionField (default: 'revision') - Field to track revision number
    - addFieldsOnly (default: false) - Only add fields without tracking
    - migrationUser - User ID for migration

Each feature provides specific functionality that can be applied to extend entity behavior in entity definitions. These features typically add additional fields, associations, or validation rules to entities.

Field Definitions

Basic Field Types

- array: Array/list of values

- binary, blob, buffer: Binary data

- boolean, bool: Boolean values

- datetime, timestamp: Date and time values

- integer, int: Integer numbers

- bigint: Large integers

- number, float, decimal: Decimal numbers

- object, json: JSON objects

- string, text: Text values

Field Qualifiers

Common Qualifiers

- code: Reserved for database field name (currently uses the model-defined name by default)

- optional: Indicates the field is optional

- default: Default value

- auto: Field value generated automatically (implementation varies by database)

- autoByDb: True if generated by database internal mechanism

- updateByDb: True if field is automatically set by DB during updates

- fillByRule: Marks field to be filled when executing rules

- readOnly: Read-only field, typically provided by rules or database

- writeOnce: Field that can be written only once

- forceUpdate: Field updated on every entity modification

- freezeAfterNonDefault: Field locked after value changed to non-default

- -- "comment": Database field comment (requires quotes)

- displayName: Display name for the field

- constraintOnUpdate: Constraint behavior on update

- constraintOnDelete: Constraint behavior on delete

Type-Specific Qualifiers

Different field types support different qualifiers:

- array: csv, delimiter, element, fixedLength, vector

- bigint: enum, unsigned

- binary: encoding, fixedLength, maxLength

- datetime: enum, format, range

- integer: enum, bytes, digits, unsigned

- number: enum, exact, totalDigits, decimalDigits, bytes, double

- object: schema, valueSchema, keepUnsanitized, jsonb

- text: emptyAsNull, enum, noTrim, fixedLength, maxLength

Field Modifiers

Modifiers enhance fields with validation, processing, or generation capabilities:

- Validators: |~name[(optional_params)] - Validate field values

- Processors: |>name[(optional_params)] - Process field values

- Generators: |=name[(optional_params)] - Generate field values

Example

password : text maxLength(200) |~strongPassword |>hashPassword(@latest.passwordSalt) -- "User password"

passwordSalt : text fixedLength(16) readOnly |=random -- "User password salt"

In this example:

- passwordSalt is a read-only field with a randomly generated 16-character string

- password is validated with strongPassword and processed with a custom hashPassword processor

Relationship Definitions

Single Reference (refers to)

// Form 1 - References the primary key of the target entity

refers to <target_entity> [with <condition>] [[as <local_field>] [optional] [default(<value>)] [...modifiers] | [on <local_field>]]

// Form 2 - References a specific field of the target entity

refers to <target_field> of <target_entity> [with <condition>] [[as <local_field>] [optional] [default(<value>)] [...modifiers] | [on <local_field>]]

One-to-Many (hasMany + belongsTo)

// "One" side

belongs to <target_entity> [with <condition>] [[as <local_field>] [optional] [default(<value>)] [...modifiers] | [on <local_field>]]

// "Many" side

has many <target_entity> [being <target_field>]

One-to-One (hasOne + belongsTo)

// "One" side with foreign key

belongs to <target_entity> [with <condition>] [[as <local_field>] [optional] [default(<value>)] [...modifiers] | [on <local_field>]]

// "One" side without foreign key

has one <target_entity> [being <target_field>]

Many-to-Many (hasMany + hasMany)

Many-to-many relationships can be defined either by manually creating a junction table or by letting XEML automatically generate one.

Primary Key (key)

By default, the primary key is the first field or a field described in features (like autoId). You can explicitly specify it with:

key <field_name>

Indexes (index)

Define non-foreign key indexes (foreign keys are automatically added in relationship definitions):

index

<field> [is unique]

"[" <field_array> "]" [is unique]

Remove an inherited index:

index

"-" (<field>|"[" <field_array> "]")

Views

Define common output view dataset definitions:

views

<view_name>

$select

Initialization Data

Define predefined initialization data:

data [<optional_dataset_name>] [in <environment>] [

{ ...key_value_pairs }

]

Example:

data [

{ code: 'PUB', name: 'Public', desc: 'All user can see' },

{ code: 'CNT', name: 'Contact', desc: 'Only your contacts can see' },

{ code: 'PRI', name: 'Private', desc: 'Only yourself can see' }

]

data "test" in "development" [

{ code: 'PUB', name: 'Public', desc: 'All user can see' },

{ code: 'CNT', name: 'Contact', desc: 'Only your contacts can see' },

{ code: 'PRI', name: 'Private', desc: 'Only yourself can see' }

]

Type Definitions

XEML allows defining reusable types:

type

idString : text maxLength(64) emptyAsNull

uuid : text fixedLength(36)

shortName : text maxLength(60) emptyAsNull

// ...

Abstract Entities

Abstract entities serve as templates that can be extended by other entities:

abstract entity dictionaryByAutoId

with

autoId({ startFrom: 100 })

createTimestamp

updateTimestamp

logicalDeletion

has

name

desc

index

name is unique

These abstract entities can be extended to create concrete entities with all the inherited features, fields, and behaviors.

API Schema Documentation

The API schema is defined through YAML files located in the xeml/api directory of an app module. The system processes these files to generate API controllers with proper validation and business logic.

Directory Structure

/xeml/

/api/

__types.yaml # Shared type definitions

__groups.yaml # API group definitions

__responses.yaml # Response definitions

_resource1.default.yaml # Generated default API definitions (can be overridden)

_resource2.default.yaml # Generated default API definitions (can be overridden)

resource1.yaml # Resource API definitions

resource2.yaml # Another resource API definitions

...

Notes:

- __types, __groups, and __responses are special files that are not processed as resources and can be extended from packages configured in dataModel.apiExtends.

- @xgent/xem-base has already defined some commonly used types, groups and responses.

Example dataModel configuration:

dataModel:

schemaPath: './xeml'

schemaSet:

forApi:

dataSource: postgres.forApi

apiExtends:

- '@xgent/xem-base'

dependencies:

commons: '@xgent/xem-commons'

base: '@xgent/xem-base'

Type Definitions (__types.yaml)

This file defines reusable types that can be referenced in API responses using JTMS syntax from @kitmi/validators.

# Example __types.yaml

UserCredentials:

type: object

schema:

username:

type: text

post:

- ['~minLength', 3] # post validation for username

password:

type: text

post:

- ['~minLength', 8]

PaginationParams:

type: object

schema:

page:

type: integer

default: 1

pageSize:

type: integer

default: 20

Types can also be parameterized using the $args property:

ListResult:

$args:

- itemType

$base: $type.PaginationResponse # extends from a type from __types

status:

type: 'const'

value: 'ok'

data:

type: array

element:

$extend: $args.itemType # use the itemType parameter

Type References

- Directly use other type

typeOrField: $type.OtherType

- Extend from a type

typeOrField:

$base: $type.OtherType

extraField1: # add more fields

type: text

Group Definitions (__groups.yaml)

This file defines API groups that organize controllers into different directories.

# Example __groups.yaml

users:

moduleSource: project

controllerPath: controllers/users

auth:

moduleSource: project

controllerPath: controllers/auth

Resource API Definitions

Each resource file (e.g., users.yaml) defines one or more resources with their endpoints. The system also generates default resource files (e.g., _users.default.yaml) that provide basic CRUD operations based on the entity's views and datasets.

# Example users.yaml

/users:

description: User management API

group: users

endpoints:

get:

description: List all users

request:

query:

$base: $type.PaginationParams # extends from a type from __types

name:

type: text

optional: true

responses:

200:

description: List of users

implementation:

- $business.users.listUsers_($local.query)

post:

description: Create a new user

request:

body: $type.UserCredentials # directly uses a type from __types

responses:

201:

description: User created

implementation:

- $business.users.createUser_($local.body)

/{id}:

get:

description: Get user by ID

request:

params:

id:

type: integer

responses:

200:

description: User details

implementation:

- $business.users.getUser_($local.id)

Default API Generation

The system automatically generates default API schema files (_<resourceName>.default.yaml) for each entity in the schema. These provide basic CRUD operations:

- GET /resource - List all resources (uses entity's list view if available)

- POST /resource - Create a new resource (uses entity's new dataset if available)

- GET /resource/{id} - Get resource by ID (uses entity's detail view if available)

- PUT /resource/{id} - Update resource by ID (uses entity's update dataset if available)

- DELETE /resource/{id} - Delete resource by ID (uses entity's deleted view if available)

If a user-defined <resourceName>.yaml file exists, it takes precedence over the generated default schema.

Default Views and Datasets Resolution

When generating default APIs, the system looks for specific views and datasets:

- For list operations, it checks for a list view, otherwise uses $default.listView

- For detail operations, it checks for a detail view, otherwise uses $default.detailView

- For delete operations, it checks for a deleted view, otherwise uses $default.deletedView

- For create operations, it checks for a new dataset, otherwise uses $any

- For update operations, it checks for an update dataset, otherwise uses $any
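The resolution rules listed above amount to a lookup with a fallback. The following sketch is illustrative only: `resolveDefaultRef` and the `{ views, datasets }` entity shape are assumptions, while the view and dataset names and the fallback references come from the list.

```javascript
// Lookup table taken from the rules above; function and entity shape are hypothetical.
const DEFAULT_RULES = {
    list: { kind: 'views', name: 'list', fallback: '$default.listView' },
    detail: { kind: 'views', name: 'detail', fallback: '$default.detailView' },
    delete: { kind: 'views', name: 'deleted', fallback: '$default.deletedView' },
    create: { kind: 'datasets', name: 'new', fallback: '$any' },
    update: { kind: 'datasets', name: 'update', fallback: '$any' },
};

function resolveDefaultRef(entityName, entity, operation) {
    const rule = DEFAULT_RULES[operation];
    if (!rule) {
        throw new Error(`Unknown operation: ${operation}`);
    }
    const defined = entity[rule.kind] || {};
    if (!Object.prototype.hasOwnProperty.call(defined, rule.name)) {
        return rule.fallback; // nothing defined on the entity, use the default
    }
    const prefix = rule.kind === 'views' ? '$view' : '$dataset';
    return `${prefix}.${entityName}.${rule.name}`;
}

const user = { views: { list: {} }, datasets: { update: {} } };
console.log(resolveDefaultRef('user', user, 'list'));
console.log(resolveDefaultRef('user', user, 'create'));
```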

Schema Components

Base Endpoint Definition

Each resource file contains one or more base endpoints:

/endpoint-path: # base endpoint path

description: Description of the resource

group: groupName

endpoints:

# HTTP methods and their handlers

Endpoint Definition

Each endpoint is defined by HTTP method or sub-routes:

get:

description: Description of the endpoint

request:

# Request validation

responses:

# Response definitions

implementation:

# Business logic implementation

/{projectRef}: # sub-route with parameter :projectRef

# ...endpoints

Request Validation

The request section defines validation for different parts of the request:

request:

headers:

authorization:

type: text

query:

search:

type: text

optional: true

params:

id:

type: integer

body:

$base: $type.SomeType # extends from a type from __types

extraField:

type: text

state:

- user.id

- user.role

Note: request currently supports 5 data sources: headers, query, params, body, and state.

- headers: ctx.headers

- query: ctx.query

- params: ctx.params

- body: ctx.request.body

- state: ctx.state

The schema of each data source follows the same JTMS syntax as the __types section.

Data References

The system supports several reference types:

- Type References: $type.TypeName - References a type from __types

- Dataset References: $dataset.EntityName.DatasetName - References a dataset schema

- Entity Field References: $entity.EntityName.FieldName - References an entity field

- View References: $view.EntityName.ViewName - References an entity view

- Default References: $default.viewType - References a default view type:
  - $default.listView - Default list view ($select: ['*'])
  - $default.detailView - Default detail view ($select: ['*'])
  - $default.deletedView - Default deleted view ($select: ['key'])

- Any Data: $any - Accepts any data (used when no specific dataset is defined)

Implementation

The implementation section defines the business logic to execute:

implementation:

- $business.serviceName.methodName($local.param1, $local.param2)

- $entity.EntityName.methodName($local.param, $view.EntityName.ViewName)

Business methods can be synchronous or asynchronous (with a trailing underscore).

Entity method calls use the following pattern:

$entity.EntityName.methodName(arguments...)

Supported entity methods include:

- findOne_ - Find a single entity

- findManyByPage_ - Find entities with pagination

- create_ - Create a new entity

- updateOne_ - Update an entity

- deleteOne_ - Delete an entity

When using view references in entity methods, you can optionally provide a $where parameter:

$view.EntityName.ViewName($local.filter)

This will be translated to:

{ $where: filter, $view: 'ViewName' }

For methods that return modified entities (create, update, delete), the view reference is translated differently:

- For create_: { $getCreated: { $view: 'ViewName' } }

- For updateOne_: { $getUpdated: { $view: 'ViewName' } }

- For deleteOne_: { $getDeleted: { $view: 'ViewName' } }
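The two translation rules can be summarised in one function. This is a sketch under stated assumptions: `translateViewRef` is a hypothetical name, and how a filter argument is handled for create_, updateOne_ and deleteOne_ is not described here, so the sketch ignores it for those methods.

```javascript
// Hypothetical translator following the documented rules; not the generator's real code.
const RETURNING_OPTION = {
    create_: '$getCreated',
    updateOne_: '$getUpdated',
    deleteOne_: '$getDeleted',
};

function translateViewRef(methodName, viewName, filter) {
    const returning = RETURNING_OPTION[methodName];
    if (returning) {
        // Modifying methods wrap the view in their "get modified record" option.
        return { [returning]: { $view: viewName } };
    }
    // Query methods take the view directly, plus the optional $where filter.
    const options = { $view: viewName };
    if (filter !== undefined) {
        options.$where = filter;
    }
    return options;
}

console.log(translateViewRef('findOne_', 'detail', { id: 1 }));
console.log(translateViewRef('updateOne_', 'detail'));
```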

All business methods should return an object containing result and payload, i.e. { result, payload }.

Code Generation

The system generates:

- Default API schema files (_<resourceName>.default.yaml) for each entity

- API controller classes for each resource (either from user-defined or default schemas)

- Index files for each group that exports all controllers

Controller Method Mapping

HTTP methods in API schema files map to controller methods as follows:

Base routes:

- GET /resource → query_

- POST /resource → post_

- PUT /resource → putMany_

- PATCH /resource → patchMany_

- DELETE /resource → deleteMany_

Sub-routes with parameters:

- GET /resource/{id} → get_

- PUT /resource/{id} → put_

- PATCH /resource/{id} → patch_

- DELETE /resource/{id} → delete_
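The mapping above is a plain lookup keyed by HTTP method and route kind. A minimal sketch, where the function name `controllerMethod` and the way a sub-route is detected (a trailing /{param} segment) are assumptions for illustration:

```javascript
// Tables copied from the mapping above; the lookup function itself is hypothetical.
const BASE_METHODS = { GET: 'query_', POST: 'post_', PUT: 'putMany_', PATCH: 'patchMany_', DELETE: 'deleteMany_' };
const SUB_METHODS = { GET: 'get_', PUT: 'put_', PATCH: 'patch_', DELETE: 'delete_' };

function controllerMethod(httpMethod, path) {
    // A trailing segment such as /{id} marks a sub-route with a parameter.
    const isSubRoute = /\/\{[^/}]+\}$/.test(path);
    const table = isSubRoute ? SUB_METHODS : BASE_METHODS;
    const name = table[httpMethod.toUpperCase()];
    if (!name) {
        throw new Error(`No controller method documented for ${httpMethod} ${path}`);
    }
    return name;
}

console.log(controllerMethod('GET', '/resource')); // query_
console.log(controllerMethod('get', '/resource/{id}')); // get_
```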

Special Features

Sub-Routes with Parameters

Routes with parameters are defined using the /{paramName} syntax:

/{id}:

get:

# Get by ID endpoint

put:

# Update by ID endpoint

delete:

# Delete by ID endpoint

Request Data Processing

The system supports:

- Base Types: Using $base to extend existing types

- Type Specialization: Using parameterized types

- Field Validation: Using @kitmi/validators to do validation

Response Handling

All endpoints use a standard response format through the send method:

this.send(ctx, result, payload);

Common Mistakes

@kitmi/data

Operation result

For historical compatibility reasons, all DB operations except findOne_ return an object containing the result data and affectedRows, while findOne_ returns the result data directly.

For example:

- findOne_

// find one

const targetApp = await UserAuthorizedApp.findOne_({

$select: ['id'],

$where: {

user: user.id,

app: appId,

},

});

- others

// updateOne_ with get updated

const { data: session } = await UserSession.updateOne_(..., { $getUpdated: true });

// findMany_

const { data: apps } = await App.findMany_({ $view: 'appListItem', $relation: ['users'], $where: where });

// findManyByPage_

const { data, totalCount } = await Project.findManyByPage_({ $view: 'listItem', $where: where }, page, records);
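The typical mistake is treating the wrapped result of findMany_ as if it were the records themselves. The mock below only imitates the two result shapes described above; `MockEntity` and its rows are invented for illustration and are not @kitmi/data code.

```javascript
// Mock entity that only imitates the documented result shapes.
const MockEntity = {
    rows: [{ id: 1 }, { id: 2 }],
    async findOne_() {
        return this.rows[0]; // the record itself
    },
    async findMany_() {
        return { data: this.rows, affectedRows: this.rows.length }; // wrapped result
    },
};

async function demo() {
    const one = await MockEntity.findOne_({ $where: { id: 1 } });
    const wrong = await MockEntity.findMany_({}); // mistake: this is not an array
    const { data: many } = await MockEntity.findMany_({}); // correct: unwrap data
    return { one, wrongLength: wrong.length, manyLength: many.length };
}

demo().then((result) => console.log(result));
```

In this mock `wrong.length` is undefined because the wrapper object has no length property, whereas the destructured `many` is the actual array.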

Query options

- $relation: no trailing s (i.e. not $relations)

Transactions

When an EntityModel is referenced within parallel loops, implicit transactions within the EntityModel can cause the EntityModel’s connection to be taken over by the transaction, thus affecting other parallel EntityModel references. The correct handling methods include:

- Always use db.entity('EntityName') to create a new EntityModel instance. This ensures that even if a dynamically created EntityModel within parallel logic becomes transactional, it won’t affect other parallel calls.

- Avoid implicit or explicit transactional calls inside concurrent code where possible. Instead, convert the parallel execution into sequential execution.
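Converting parallel execution into sequential execution usually means replacing Promise.all over a mapped array with an awaited loop. A minimal sketch, where `runSequentially` is a hypothetical helper and `work` stands in for any transactional entity call:

```javascript
// Hypothetical task runner; `work` stands in for any transactional entity call.
async function runSequentially(items, work) {
    const results = [];
    for (const item of items) {
        // Each call finishes before the next one starts, so no two calls
        // ever compete for the same connection.
        results.push(await work(item));
    }
    return results;
}

// Parallel form to avoid when `work` opens a transaction:
//   await Promise.all(items.map((item) => work(item)));
```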